The algorithm uses additional information from:

- a Google Chrome browser add-on that lets users block low-quality sites

- the site-blocking feature provided in Google Search

- social sharing: not the actual number of likes, but the links that a website gets as a result of Facebook shares or retweets.

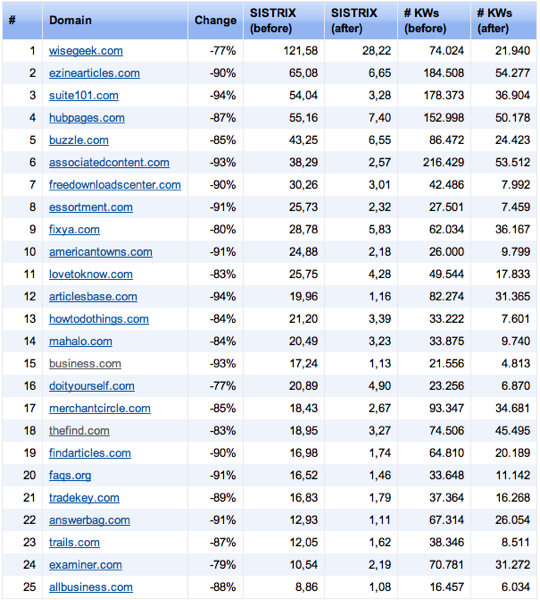

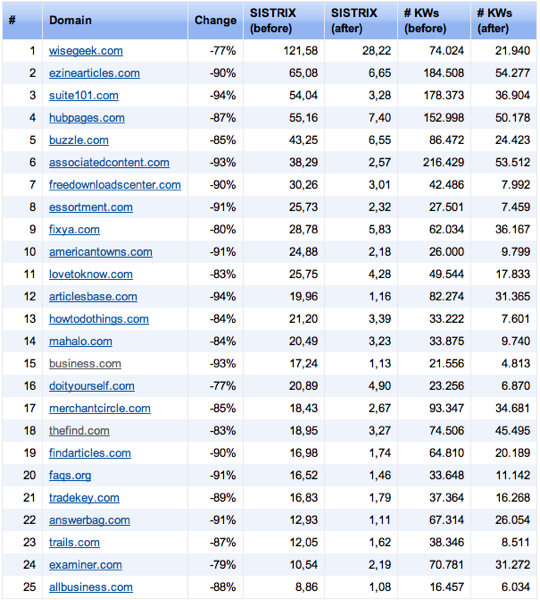

Here is a sample table showing the traffic decline, somewhere between 70% and 90%, for popular websites affected by the Panda update. (Note that the company providing the results measures a particular set of keywords, not the whole long-tail traffic of the websites mentioned.)

Another triggering factor of the update is a lack of user satisfaction, measured by the amount of time spent on a page before the user clicks the back button to return to the search results and look for another website.

Here is what you can do to make your website less affected by the update:

1. Use the noindex meta tag on thin or low-quality content. Example: second-level category pages that contain only links and no actual content.

2. Delete all copied content and make those pages return the HTTP status 404 Not Found.

3. Reduce the number of adverts or other page elements appearing before the actual content (above the fold), or make sure that they blend in with the content:

[Figure: site layouts that highlight content vs. site layouts that push content below the fold]

4. Use Google Analytics to find the pages with the lowest time on page, and restrict them using the same method as in step 1.

5. By default, apply the noindex meta tag to all new pages until they have quality content.

6. Don't use white text on a black background.

7. Make sure that users spend as much time as possible on each page.

Next, here are some tips on recovering a website from the Penguin / Panda updates. They are not well known to the general audience, which is why I decided to share them here.
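Before the recovery tips: steps 1, 4 and 5 of the Panda list above can be partly automated. The sketch below assumes you have exported per-page statistics (average time on page, for example from Google Analytics, plus a word count of the real content). The `PageStats` record, the `pages_to_noindex` helper and both thresholds are hypothetical choices for illustration, not values Google publishes.

```python
from dataclasses import dataclass

# Sketch only: PageStats, pages_to_noindex and these thresholds are hypothetical.
MIN_SECONDS_ON_PAGE = 15.0
MIN_CONTENT_WORDS = 150

# Standard robots meta tag that keeps a page out of the index (steps 1 and 5).
NOINDEX_TAG = '<meta name="robots" content="noindex">'


@dataclass
class PageStats:
    url: str
    avg_seconds_on_page: float  # e.g. exported from Google Analytics
    content_words: int          # words of real content, excluding menus and ads


def pages_to_noindex(pages: list[PageStats]) -> list[str]:
    """Return URLs that look thin or hold visitors too briefly, worst first."""
    flagged = [
        p for p in pages
        if p.content_words < MIN_CONTENT_WORDS
        or p.avg_seconds_on_page < MIN_SECONDS_ON_PAGE
    ]
    # Lowest time on page first, matching step 4.
    flagged.sort(key=lambda p: p.avg_seconds_on_page)
    return [p.url for p in flagged]


if __name__ == "__main__":
    sample = [
        PageStats("/category/sub", 5.0, 40),
        PageStats("/guide", 120.0, 1500),
        PageStats("/short-note", 10.0, 900),
    ]
    for url in pages_to_noindex(sample):
        print(f"{url}: add {NOINDEX_TAG}")
```

Thin pages and pages with a low time on page are flagged by the same rule here; you may prefer to review the two groups separately before adding the tag.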

Redirects

Use the curl command to check whether all your website resources respond with 200 OK rather than 301 or 302 redirects. The reason is that curl shows the real, uncached header status codes, which can differ from what the browser or Firebug displays by default.

From a command prompt: `curl -IL http://your_website.com` (`-I` fetches only the response headers, and `-L` follows any redirects so the whole chain is shown).

Next, check your main page, category pages, inner content pages, and JavaScript and CSS files, and make sure that they all return a 200 OK response.

The same check can be done with the Screaming Frog SEO Spider.
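The curl check can also be scripted over a list of resources. The sketch below uses Python's standard `http.client`, which does not follow redirects, so a 301 or 302 is reported as the status of the first hop (unlike `curl -L`, which walks the whole chain). `head_status` and `non_200` are hypothetical helper names, and some servers answer HEAD requests differently from GET, so treat the result as a first pass.

```python
import http.client
from urllib.parse import urlsplit


def head_status(url: str, timeout: float = 10.0) -> int:
    """Send one HEAD request and return the raw status code.

    http.client never follows redirects, so 301/302 come back as-is.
    """
    parts = urlsplit(url)
    conn_cls = (http.client.HTTPSConnection if parts.scheme == "https"
                else http.client.HTTPConnection)
    conn = conn_cls(parts.hostname, port=parts.port, timeout=timeout)
    try:
        path = parts.path or "/"
        if parts.query:
            path += "?" + parts.query
        conn.request("HEAD", path)
        return conn.getresponse().status
    finally:
        conn.close()


def non_200(urls, fetch=head_status):
    """Map every URL that does not answer 200 OK to its status code.

    `fetch` is injectable so the logic can be tested without a network.
    """
    problems = {}
    for url in urls:
        status = fetch(url)
        if status != 200:
            problems[url] = status
    return problems
```

For example, `non_200(["http://your_website.com/", "http://your_website.com/style.css"])` returns an empty dict when every listed resource answers 200 OK.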

DNS

Check the latest propagated DNS settings of your website. Most DNS-checking websites, as well as the nslookup command, return cached name-server responses, even if you clear (flush) your system's DNS cache. That's why I recommend checking your website's name servers with the http://dns.squish.net/ website. Also make sure that the IP address of your DNS server (its A record) matches your domain's NS entry. Once you are sure there are no problems, go to Google Webmaster Tools and fetch your URL with Fetch as Google.

Removing duplicate content

When requesting URL removals in Webmaster Tools, make sure that you type the URL manually, or, if it is too long, use the browser's developer tools console to inspect the URL and then copy and paste it directly into the input box. If you just select and copy the URL with the mouse from the search results, there is a high chance that invisible characters such as %20%80%B3 get appended to it. The request will still look legitimate, but don't be surprised to see the already removed URL reappear in the index. For this reason, re-check Google's index a few days after the removal to see whether any occurrences of the URL are still present. Less obvious indexed URLs to look for include your .js, .swf or .css files.
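If you paste URLs from search results anyway, a small clean-up step can strip that debris first. The hypothetical `clean_copied_url` helper below is a sketch under one assumption: anything invisible (whitespace, zero-width "format" characters, or bytes that are not valid UTF-8) is junk. It removes such characters anywhere in the string, and removes a trailing run of percent-escapes only when that run decodes to nothing visible.

```python
import re
import unicodedata
from urllib.parse import unquote

# A run of percent-escapes at the very end of the URL, e.g. "%20%80%B3".
_TRAILING_ESCAPES = re.compile(r"(?:%[0-9A-Fa-f]{2})+$")


def _is_invisible(ch: str) -> bool:
    """Whitespace, zero-width/format characters, or undecodable bytes (U+FFFD)."""
    return ch.isspace() or unicodedata.category(ch) == "Cf" or ch == "\ufffd"


def clean_copied_url(raw: str) -> str:
    """Hypothetical helper: strip invisible characters from a copied URL."""
    # 1. Drop raw invisible characters anywhere in the string.
    url = "".join(ch for ch in raw if not _is_invisible(ch))
    # 2. Drop a trailing run of escapes only if it decodes to nothing visible,
    #    so legitimate endings such as "caf%C3%A9" are kept.
    match = _TRAILING_ESCAPES.search(url)
    if match:
        decoded = unquote(match.group(0), errors="replace")
        if all(_is_invisible(ch) for ch in decoded):
            url = url[: match.start()]
    return url
```

For example, `clean_copied_url("http://your_website.com/page%20%80%B3")` returns `"http://your_website.com/page"`. A trailing run that mixes a visible escaped character with debris is left untouched, so still eyeball the result.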

To remove content effectively, it should either return a 404 Not Found status, or 1) carry a noindex, nofollow robots meta tag and 2) not be blocked in the robots.txt file.
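The rule above can be checked mechanically with Python's standard `urllib.robotparser`. In this sketch the `removal_ready` name and its inputs (the page's status code, the content of its robots meta tag, and your robots.txt text) are hypothetical. It checks for `noindex`, the directive that keeps a page out of the index; the article pairs it with `nofollow`.

```python
from urllib.robotparser import RobotFileParser


def removal_ready(url: str, status: int, meta_robots: str,
                  robots_txt: str, user_agent: str = "Googlebot") -> bool:
    """Hypothetical check: True for a 404, or a crawlable page marked noindex."""
    if status == 404:
        return True
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # A URL blocked in robots.txt is not recrawled, so its noindex is never seen.
    crawlable = parser.can_fetch(user_agent, url)
    directives = {d.strip().lower() for d in meta_robots.split(",")}
    return crawlable and "noindex" in directives
```

For example, a page marked `noindex, nofollow` but also listed under `Disallow:` in robots.txt returns `False`, which is exactly the trap described in the robots.txt section.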

Also, if you have access to the .htaccess file, here is how to add a canonical link (sent as an HTTP `Link` header) to .swf or .json files:

`Header set Link '<http://domain.com/canonical_file.swf>; rel="canonical"'`

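This way, any existing link power accumulates on the canonical URL. Note that mod_headers must be enabled, and that a bare Header directive in .htaccess applies to every response served from that directory, so wrap it in a `<Files>` section that matches only the duplicate file.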
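To confirm that the header is actually being sent, look at the response headers of the duplicate file (for example with `curl -I`) and find the `Link` header with `rel="canonical"`. The hypothetical helper below extracts the canonical target from such a header value. It is a simplified parser that does not handle commas or semicolons inside quoted parameters.

```python
import re
from typing import Optional

# One link-value: "<target>" followed by zero or more ";param" pieces.
_LINK_VALUE = re.compile(r"<([^>]*)>\s*((?:;\s*[^;,]+)*)")


def canonical_from_link_header(value: str) -> Optional[str]:
    """Hypothetical helper: return the first rel="canonical" target, or None."""
    for match in _LINK_VALUE.finditer(value):
        target, params = match.group(1), match.group(2)
        for param in params.split(";"):
            name, _, raw = param.partition("=")
            # rel may hold several space-separated values, quoted or not.
            rels = raw.strip().strip('"').lower().split()
            if name.strip().lower() == "rel" and "canonical" in rels:
                return target
    return None
```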

Robots.txt, noindex, nofollow meta tags

To reveal content that is indexed but hidden from Google's SERPs, temporarily release everything from your robots.txt file (just leave it empty) and stop all the .htaccess redirects that you have.

This way you will reveal hidden parts of the website that are still in the index (like indexed 301-redirected pages) and will be able to clean them up.

The other reason behind this change is that URLs blocked in robots.txt won't be crawled, so their link rank won't be recalculated the way you want, even if they are marked with the noindex, nofollow meta tag. After the cleanup, don't forget to restore the contents of your robots.txt file.

Google Penguin

This update, previously known as the anti-spam or over-optimization update, is now more dynamic and is recalculated much more often. If you are not sure exactly which Google quality update affected your website, making random changes and waiting for feedback (i.e. a traffic increase) is hard and slow. So here is one convenient, logical method to find out.

First, get your site visit logs from Google Analytics or any other statistics software. We are interested in the total number of unique visitors. Then open this document: http://moz.com/google-algorithm-change. Next, check whether you had even a slight drop in traffic on the listed dates, or a few days after them, starting from February 23, 2011. If so, your pages have been hit and it's time to take measures against that specific update. But first, let's check your content against the following practices, which are often recommended in forums, articles, video tutorials and paid SEO materials.
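The date check described above can be sketched as follows. It assumes you have exported daily unique visitors into a `{date: count}` dict and copied the update dates by hand from the Moz list; the helper names, the 7-day window, the 0 to 3 day lag and the -10% threshold are all hypothetical choices, not values Google or Moz publish.

```python
from datetime import date, timedelta
from statistics import mean
from typing import Optional

# Sketch only: helper names, window, lag and threshold are hypothetical.


def _mean_over(daily: dict[date, int], start: date, days: int) -> Optional[float]:
    """Mean unique visitors over `days` consecutive days from `start` (gaps skipped)."""
    values = [daily[start + timedelta(days=i)] for i in range(days)
              if start + timedelta(days=i) in daily]
    return mean(values) if values else None


def percent_change(daily: dict[date, int], update_day: date,
                   window: int = 7, lag: int = 0) -> Optional[float]:
    """Change (%) between the `window` days before the update and the
    `window` days starting `lag` days after it. Negative means a drop."""
    before = _mean_over(daily, update_day - timedelta(days=window), window)
    after = _mean_over(daily, update_day + timedelta(days=lag), window)
    if not before or after is None:
        return None
    return (after - before) / before * 100.0


def suspect_updates(daily: dict[date, int], updates: dict[str, date],
                    threshold: float = -10.0, window: int = 7,
                    max_lag: int = 3) -> list[tuple[str, float]]:
    """List (update name, worst % change) for updates followed by a drop."""
    hits = []
    for name, day in updates.items():
        changes = []
        for lag in range(max_lag + 1):  # "on the date or a few days after"
            change = percent_change(daily, day, window, lag)
            if change is not None:
                changes.append(change)
        if changes and min(changes) <= threshold:
            hits.append((name, round(min(changes), 1)))
    return hits
```

Comparing means over a window rather than single days smooths out weekday/weekend swings; an update that shows up here is only a candidate, since seasonality or your own site changes can cause the same pattern.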

Over-optimization:

- keyword density above 5%, and keywords stuffed or bolded/emphasized (em) in the meta keywords, description and title, as well as in h1...h6 headings and img title and alt attributes

- leaving intact the link to the blog theme's author ("powered by")

- adding links with keyword variations in the anchor text to the left, top or bottom sections of the website (which in reality receive almost no clicks)

- creating multiple low-quality pages targeting the same keywords, trying to be perceived as an authority on the subject

- creating duplicate content by using multiple redirects (301, 302, JavaScript, .htaccess, etc.)

- overcrowding the real content with ads so that it appears far down the page flow, requiring lots of scrolling to be seen

- using keywords in the page URL and in the domain name
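Keyword density from the first item above is simple to measure on your own pages. This sketch assumes one common definition (words covered by occurrences of the keyword phrase, divided by total words, times 100); the helper names are hypothetical, and the 5% limit is the figure quoted in the list, not an official Google number.

```python
import re

# Hypothetical tokenizer: runs of letters, digits, underscores and apostrophes.
WORD = re.compile(r"[\w']+")


def keyword_density(text: str, keyword: str) -> float:
    """Percent of the words in `text` taken up by occurrences of `keyword`."""
    words = WORD.findall(text.lower())
    phrase = WORD.findall(keyword.lower())
    if not words or not phrase:
        return 0.0
    n = len(phrase)
    # Count every position where the whole phrase starts.
    hits = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == phrase)
    return hits * n / len(words) * 100.0


def is_stuffed(text: str, keyword: str, limit: float = 5.0) -> bool:
    """True when density exceeds the 5% figure quoted in the list above."""
    return keyword_density(text, keyword) > limit
```

For example, in "Cheap shoes: buy cheap shoes online today, now!" the phrase "cheap shoes" covers 4 of 8 words, so `keyword_density` returns `50.0`.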

Link schemes:

- being a member of web-ring networks or modern link exchanges. Some sophisticated networks use complex algorithms that choose and point appropriate links to your newly created content, making it appear solid

- having low perceived value (no branding or trust gained over the years) while receiving multiple (purchased) links from better-ranking websites

- Penguin 1 targeted sites with unnatural or paid links, analyzing only the main page of a particular website. Over time, 'clever' SEOs started buying links pointing not only to the main page but also to inner pages or categories, which led to Penguin 2, where inner pages with spammy link profiles are also taken into account.

- more and more people use comments to sneak in their links. The old/new spamming technique goes like this: they say something half-meaningful about your content, or something that sounds like praise, and then put their website link in the middle of that sentence. Please don't approve such comments; report them as spam.

- rewrite the questionable content or consolidate it with a few other posts

- delete the low-quality page if it already has a 'not so clean' backlink profile and the linking site doesn't want to remove its links

- use the Google Disavow tool to clean up your website's backlink profile
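The disavow tool takes a plain text file with one entry per line: either a full URL or `domain:` followed by a domain name, with lines starting with `#` treated as comments. The hypothetical `build_disavow` helper below sketches how a list of flagged backlinks could be turned into such a file, dropping individual URLs already covered by a disavowed domain. Which links to flag remains your own judgment.

```python
from urllib.parse import urlsplit


def build_disavow(bad_urls: list[str], bad_domains: list[str]) -> str:
    """Hypothetical helper: build disavow-file text.

    'domain:' lines come first, then individual URLs whose host is not
    already covered by one of those domains (or their subdomains).
    """
    domains = sorted({d.strip().lower() for d in bad_domains if d.strip()})
    lines = ["# Links we asked to remove but which are still live"]
    lines += [f"domain:{d}" for d in domains]
    for url in sorted(set(bad_urls)):
        host = (urlsplit(url).hostname or "").lower()
        covered = any(host == d or host.endswith("." + d) for d in domains)
        if not covered:
            lines.append(url)
    return "\n".join(lines) + "\n"
```

Write the returned text to a .txt file and upload it through the Disavow tool.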

Don't forget that, as with every Panda update, Google makes its changes continuously, using multiple tests and corrections to the algorithms. So some fluctuations may be observed while examining the SERPs.

Google Panda

As you may know, there is an ongoing algorithmic update named "Panda" or "Farmer", aimed at filtering thin, made-for-AdSense and copied content out of Google's search index. The algorithm mainly pushes websites down in the SERPs, aiming to replace them with author-owned content websites, which could be more useful to visitors. The filter itself is an automatic semantic algorithm that relies on various triggering factors to classify content as useful or not. The triggering factors remain secret, but it is known that websites serving content of mixed quality (both bad and good) to their visitors will have their SEO rankings impaired.