Ever wondered why a particular website might get fewer and fewer visits?

One reason for this might be that it is inside Google's sandbox, so it gets little or no traffic from Google searches. In such situations you may notice the following:

1. A drop in the total number of website visitors coming from google.com.

2. A sudden drop in the Google PageRank of all website pages.

3. When querying Google for specific keywords, the website appears only in the last 2-3 pages of the search results or is missing from Google's listing altogether.

How to check if a website is in Google's sandbox

If you wish to check whether the sandbox has been applied to a particular website, try the following methods:

I Method

Use this web address to check the indexing of your website pages against a specific keyword:

http://www.searchenginegenie.com/sandbox-checker.htm

II Method

This method is much more reliable: open your web browser and go to http://www.google.com

Then type in the search box:

www.yourwebsite.com -asdf -asdf -asdf -fdsa -sadf -fdas -asdf -fdas -fasd -asdf -asdf -asdf -fdsa -asdf -asdf -asdf -asdf -asdf -asdf -asdf

If your website appears in these search results with a good ranking (even though it ranks poorly in a normal search), then your website is in Google's sandbox.
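If you run this check often, the query URL can be built programmatically instead of typed by hand. A minimal Python sketch, assuming Google's standard https://www.google.com/search?q=... results URL; the nonsense words are of the same kind as in the query above and are repeated to give the same 20 exclusion terms (that any never-used strings behave the same way is an assumption):

```python
from urllib.parse import urlencode

# Nonsense words like the ones in the query above (assumption: any strings
# that never appear on real pages work equally well).
JUNK_WORDS = ["asdf", "fdsa", "sadf", "fdas", "fasd"]

def sandbox_query_url(domain, repeats=4):
    """Return a Google results URL for '<domain> -asdf -fdsa ...' (20 terms by default)."""
    terms = [domain] + ["-" + word for word in JUNK_WORDS * repeats]
    # urlencode turns spaces into '+' and percent-encodes anything unsafe
    return "https://www.google.com/search?" + urlencode({"q": " ".join(terms)})

print(sandbox_query_url("www.yourwebsite.com"))
```

Open the printed URL in your browser and read the results as described above.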

III Method

Open your web browser, go to http://www.google.com and type:

site:www.yourwebsite.com

If no results are found, your website is out of Google's index. The difference between non-indexed fresh websites and sandboxed ones is that for a sandboxed website you will not see Google's message: "If the URL is valid, try visiting that web page by clicking on the following link: www.yourwebsite.com"

IV Method

When running a Google query, add the following to the end of the results page URL:

&filter=0

This shows all of your website's results from both the primary and the supplemental Google index. If your website has been penalized, its results will reside in the supplemental index.
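Appending the parameter by hand works, but if the URL already carries a filter value you want to replace it rather than add a second one. A minimal Python sketch using only urllib.parse; the example URL is a placeholder:

```python
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

def with_filter_off(search_url):
    """Return search_url with filter=0, replacing any existing filter value."""
    parts = urlsplit(search_url)
    # keep every other query parameter (including empty ones) in its original order
    params = [(k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True)
              if k != "filter"]
    params.append(("filter", "0"))
    return urlunsplit(parts._replace(query=urlencode(params)))

print(with_filter_off("https://www.google.com/search?q=site%3Awww.yourwebsite.com"))
```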

How to get your website out of Google's Sandbox

Next follows a guide on how to get a website out of Google's sandbox, using the following techniques:

* Have a website structure no deeper than the 3rd level (i.e. don't put content more than 3 links away from the home page, because the crawler/spider might stop crawling it).
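To find pages that break the 3-level rule, you can compute each page's click depth with a breadth-first search from the home page. A minimal Python sketch, assuming you already have the site's internal links as a dictionary (for example, collected from a crawl report); the page names are hypothetical:

```python
from collections import deque

def click_depths(links, home="/"):
    """Breadth-first search: minimum number of clicks from home to each reachable page."""
    depth = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:  # first visit in BFS = shortest path
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth

def too_deep(links, home="/", max_depth=3):
    """Pages that can only be reached through more than max_depth clicks."""
    return sorted(p for p, d in click_depths(links, home).items() if d > max_depth)

# Hypothetical site: / -> /a -> /b -> /c -> /d
site = {"/": ["/a"], "/a": ["/b"], "/b": ["/c"], "/c": ["/d"]}
print(too_deep(site))  # ['/d']
```

Pages that no link reaches at all do not show up in the result; they need an incoming link before their depth even matters.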

* Rewrite the meta tags to state explicitly which pages should and should not be indexed. For this, put the following in the head section of a page:

<meta name="robots" content="index, follow"> - for webpages that will be indexed

<meta name="robots" content="noindex, nofollow"> - for webpages that you don't want to be indexed

* Delay the crawling machines

This is especially important if your hosting server doesn't provide much bandwidth. In your robots.txt file put:

User-agent: *
Crawl-delay: 20

You can also adjust the crawl delay value. Note that Googlebot itself ignores Crawl-delay; the line slows down other crawlers that honor it, such as Bingbot.

* Remove the duplicate or invalid pages from your website that are still in Google's index/cache

First prepare a list of all the invalid pages. Then use Google's page for urgent URL removal requests:

https://www.google.com/webmasters/tools/url-removal

Ensure that those pages are no longer indexed by typing your full website address in Google with site:your_website.com. If there are no results, you have succeeded in getting the pages out of Google's index. It may sound strange, but this way you can get them reindexed. When ready, remove all the restrictions that you might have in .htaccess and in the page headers (noindex, nofollow).
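Before resubmitting, it helps to confirm that no page still carries a blocking robots meta tag. A minimal sketch built on Python's standard html.parser module; it only inspects meta name="robots" tags and does not see other blocking mechanisms such as an X-Robots-Tag HTTP header or robots.txt. The sample HTML is made up:

```python
from html.parser import HTMLParser

class RobotsMetaFinder(HTMLParser):
    """Collects the directives of every <meta name="robots"> tag on a page."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        # html.parser lowercases tag and attribute names, but not attribute values
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            content = attrs.get("content") or ""
            self.directives.update(
                part.strip().lower() for part in content.split(",") if part.strip()
            )

def blocking_directives(html):
    """Return the sorted noindex/nofollow directives found in the page, if any."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    finder.close()
    return sorted(finder.directives & {"noindex", "nofollow"})

page = '<html><head><meta name="robots" content="noindex, nofollow"></head></html>'
print(blocking_directives(page))  # ['nofollow', 'noindex']
```

An empty list means the page no longer asks to be kept out of the index.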

Next, go to http://www.google.com/addurl/?continue=/addurl, enter your website in the field for inclusion and wait for the re-indexing process to start.

While you wait, you can start getting links to your quality content from forums and article directories. These links should point not only to your top-level domain but also to specific webpages.

For example: not only <a href="http://www.website.com/"> but also <a href="http://www.website.com/mywebpage1.html">

* Remove meta-refresh and JavaScript redirects

Check whether your website uses meta-refresh or JavaScript redirects. For example:

<meta http-equiv="refresh" content="5; url=http://www.website.com/filename.php">

If so, remove them, because Google's bot may treat them as spam.
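To find such redirects in a saved page, you can scan its HTML. A minimal sketch with Python's html.parser that lists meta-refresh tags and inline scripts that look like redirects. The JavaScript pattern is a rough heuristic of our own: it catches common forms such as window.location.href = ... or location.replace(...), and it will miss redirects in external .js files or obfuscated code:

```python
import re
from html.parser import HTMLParser

# Heuristic only: "location = ...", "window.location.href = ...", location.replace(...)
# The (?!=) keeps comparisons such as "location.href == x" from matching.
JS_REDIRECT = re.compile(
    r"(?:window\.|document\.)?location(?:\.href)?\s*=(?!=)|location\.(?:replace|assign)\s*\("
)

class RedirectFinder(HTMLParser):
    def __init__(self):
        super().__init__()
        self.found = []
        self._in_script = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("http-equiv") or "").lower() == "refresh":
            self.found.append(("meta-refresh", attrs.get("content") or ""))
        elif tag == "script":
            self._in_script = True

    def handle_endtag(self, tag):
        if tag == "script":
            self._in_script = False

    def handle_data(self, data):
        # html.parser passes the raw text of <script> elements to handle_data
        if self._in_script and JS_REDIRECT.search(data):
            self.found.append(("javascript", data.strip()))

def find_redirects(html):
    finder = RedirectFinder()
    finder.feed(html)
    finder.close()
    return finder.found

print(find_redirects('<meta http-equiv="refresh" content="5; url=http://www.website.com/filename.php">'))
```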

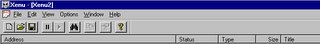

How: you can check your whole website with software such as Xenu Link Sleuth:

http://home.snafu.de/tilman/xenulink.html

Download and start the program. The whole process is straightforward: just type your website address in the input box and start the check (click File -> Check URL, which brings up a form where you fill in your website's URL). The tool will check every page on your website and produce a report. If you see 302 redirects in the report, beware and try to fix them too. With Xenu you can also check your website for broken links, etc.

* Disavow 302 redirects from other sites

Check whether websites linking to you return HTTP response code 200 OK.

In the Google search box type allinurl: http://www.yoursite.com, then check every website other than yours by entering it here:

http://www.webrankinfo.com/english/tools/server-header.php

and look for HTTP response code 200 OK.

If any of them return a 302 response code, try to contact the administrator of the problematic website to fix the problem. If you think they are stealing your PageRank, report them on Google's spam report page:

http://www.google.com/contact/spamreport.html

with a checkmark on Deceptive Redirects. As a last resort, you can also submit the URL to Google's disavow tool to clean up your backlink profile: https://www.google.com/webmasters/tools/disavow-links-main
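A server-header tool requests a page and shows the status line without following redirects. A minimal Python sketch of the same check using the standard http.client module, which never follows redirects by itself. It assumes a HEAD request is enough; some servers answer HEAD differently from GET, so treat a surprising result as a prompt to look closer. The connection_cls parameter exists only so the function can be tried without network access:

```python
import http.client
from urllib.parse import urlsplit

def head_status(url, connection_cls=None, timeout=10):
    """Send one HEAD request and return (status code, Location header or None).

    http.client does not follow redirects, so a 302 is reported as a 302.
    """
    parts = urlsplit(url)
    if connection_cls is None:
        connection_cls = (http.client.HTTPSConnection if parts.scheme == "https"
                          else http.client.HTTPConnection)
    conn = connection_cls(parts.netloc, timeout=timeout)
    path = parts.path or "/"
    if parts.query:
        path += "?" + parts.query
    try:
        conn.request("HEAD", path)
        response = conn.getresponse()
        return response.status, response.getheader("Location")
    finally:
        conn.close()

def needs_attention(status):
    """302 is the case described above; 307 (also temporary) is included as our assumption."""
    return status in (302, 307)

# Usage (needs network access; the URL list is hypothetical):
# for page in ["http://linking-site.example/links.html"]:
#     status, location = head_status(page)
#     if needs_attention(status):
#         print(page, "redirects temporarily to", location)
```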

For the next steps you will need access to your web server's .htaccess file, and the mod_rewrite module must be enabled in your Apache configuration:

* Make static out of dynamic pages

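Using mod_rewrite, you can rewrite your dynamic page URLs to look like static ones. So if you've got a dynamic .php page with parameters, you can rewrite the URL to look like a normal .html page:

look_item.php?item_id=14

for the web visitor will become:

item-14.html

HOW: Add the following lines to your .htaccess file (placed in the root directory of your web server):

RewriteEngine on
RewriteRule ^item-([0-9]+)\.html$ /sub_directory/look_item.php?item_id=$1 [L]

Because there is no R flag, this rewrite happens inside the server: the visitor keeps seeing item-14.html while look_item.php does the work. A redirect flag here would send the visitor back to the .php URL.

* Transfer previously accumulated PR and backlinks

Type in Google's search box:

site:your_website.com

This query shows all of your website's indexed pages. Since you've been moving to the static .html URLs that search engines prefer, it is a good idea to transfer the PR and links accumulated by the dynamic .php URLs to the corresponding static .html URLs. Here is an example of redirecting a .php URL request to the static page URL of the website.

HOW: Add the following lines to your .htaccess file:

RewriteCond %{THE_REQUEST} ^[A-Z]{3,9}\ /sub_directory/look_item\.php\?item_id=([0-9]+)\ HTTP/
RewriteRule ^sub_directory/look_item\.php$ http://your_website.com/item-%1.html? [R=301,L]

Here 301 means Moved Permanently, so the search engine bot will map and use http://your_website.com/item-14.html instead of look_item.php?item_id=14 as the legitimate source of information. A RewriteRule pattern never sees the query string, so the item number is captured by the RewriteCond and inserted as %1; the trailing ? keeps the old query string off the new URL. Checking THE_REQUEST, the request line the browser actually sent, means that requests for item-14.html which were rewritten internally to look_item.php are not redirected again, which would otherwise loop.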
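Mistakes in rewrite patterns are easy to make and slow to notice, so it can help to try them offline first. A minimal Python sketch that imitates (it does not call) two mod_rewrite rules of this kind: an internal rewrite from item-N.html to /sub_directory/look_item.php?item_id=N, and a 301 from an old .php request back to item-N.html that is judged on the original request line (mod_rewrite's THE_REQUEST). For patterns this simple, Python's re syntax agrees with Apache's; your_website.com and sub_directory are placeholders:

```python
import re

# Imitates: RewriteRule ^item-([0-9]+)\.html$ /sub_directory/look_item.php?item_id=$1 [L]
STATIC_PATH = re.compile(r"^item-([0-9]+)\.html$")

# Imitates: RewriteCond %{THE_REQUEST} ^[A-Z]{3,9}\ /sub_directory/look_item\.php\?item_id=([0-9]+)\ HTTP/
OLD_REQUEST = re.compile(r"^[A-Z]{3,9}\ /sub_directory/look_item\.php\?item_id=([0-9]+)\ HTTP/")

def internal_target(path):
    """Script the server runs for a static-looking path (no leading slash,
    as RewriteRule sees it in .htaccess); None if the rule does not apply."""
    m = STATIC_PATH.match(path)
    return "/sub_directory/look_item.php?item_id=" + m.group(1) if m else None

def redirect_target(request_line):
    """301 target for an old dynamic URL, judged on the original request line."""
    m = OLD_REQUEST.match(request_line)
    return "http://your_website.com/item-" + m.group(1) + ".html" if m else None

print(internal_target("item-14.html"))  # /sub_directory/look_item.php?item_id=14
print(redirect_target("GET /sub_directory/look_item.php?item_id=14 HTTP/1.1"))  # http://your_website.com/item-14.html
# The browser asked for item-14.html, so there is no redirect (and no loop):
print(redirect_target("GET /item-14.html HTTP/1.1"))  # None
```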

* Robots.txt check

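To keep Google from spidering both the .html and the .php pages and treating them as duplicate content (which is bad), place a canonical tag pointing to the version of the page that you prefer, and empty your robots.txt file so that Google can crawl the .php pages, read the tag and consolidate both PHP and HTML pages into one preferred .html version. For example, in the head section of look_item.php?item_id=14:

<link rel="canonical" href="http://your_website.com/item-14.html">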
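Python's standard urllib.robotparser can confirm that a robots.txt file no longer blocks the .php pages. A minimal sketch; the URL is the placeholder used in this article. Keep in mind that this parser does plain prefix matching and does not understand the * and $ wildcards Google supports. It also reads back any Crawl-delay value, which Googlebot itself ignores:

```python
from urllib.robotparser import RobotFileParser

PHP_URL = "http://your_website.com/sub_directory/look_item.php?item_id=14"  # placeholder

def robots_report(robots_txt, url=PHP_URL, agent="Googlebot"):
    """Return (may the agent fetch url?, Crawl-delay for the agent or None)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse text directly, no download
    return parser.can_fetch(agent, url), parser.crawl_delay(agent)

print(robots_report(""))  # (True, None): an empty file blocks nothing
print(robots_report("User-agent: *\nDisallow: /sub_directory/look_item.php\n"))  # (False, None)
```

To check your live file, paste its contents in as the robots_txt string.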

Important Note: If you already have .php files in Google's index and don't want to use the canonical tag, you can instead place the noindex, nofollow robots meta tag in the .php versions of the pages, which requires a little more effort.

* Redirect www to non-www URLs

Just check the indexing of your website with and without the preceding "www". If you find both versions indexed, you are splitting PageRank and backlinks between them and presenting duplicate content to Google. This happens because some sites link to you as http://www.your_website.com and others use the bare domain http://your_website.com, and you can't control which form the sites linking to your website use. Apache's rewrite rules can help here by redirecting the www URLs of your website to the non-www URLs. Again, to avoid PR loss and duplicates, you want your website to be accessible from only one location.

HOW: Place the following lines in your .htaccess file, right after RewriteEngine on (redirect rules should run before internal rewrite rules):

RewriteCond %{HTTP_HOST} ^www\.your_website\.com [NC]
RewriteRule (.*) http://your_website.com/$1 [R=301,L]

When you have finished redirecting www to non-www (and https to http, or vice versa) for your website, specify your preferred version in Google Webmaster Tools.
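To see which requests these two rules catch, here is a minimal Python sketch that imitates them; it is not mod_rewrite itself. The [NC] flag becomes re.IGNORECASE, and the path is passed without its leading slash because that is what (.*) captures in a .htaccess file. Apache also carries the original query string over to the new URL automatically, which this sketch leaves out:

```python
import re

# Imitates: RewriteCond %{HTTP_HOST} ^www\.your_website\.com [NC]
WWW_HOST = re.compile(r"^www\.your_website\.com", re.IGNORECASE)

def www_redirect(host, path):
    """Return the 301 target for a request, or None when no redirect applies.

    path is given without its leading slash, as (.*) sees it in .htaccess.
    """
    if WWW_HOST.match(host):
        return "http://your_website.com/" + path  # RewriteRule (.*) http://your_website.com/$1
    return None

print(www_redirect("www.your_website.com", "item-14.html"))  # http://your_website.com/item-14.html
print(www_redirect("your_website.com", "item-14.html"))  # None
```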

* Redirect index.php to root website URL

There is one more step to avoid duplicate content: you must redirect your index.html, index.htm, index.asp or index.php to ./, the root of your website.

HOW: Insert the following lines in your .htaccess file, before the two previously mentioned lines:

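RewriteCond %{THE_REQUEST} ^[A-Z]{3,9}\ /subdomain/index\.php\ HTTP/
RewriteRule index\.php http://yourwebsite.com/subdomain/ [R=301,L]

Note: If your website is hosted in a subdirectory, fill in its name in place of /subdomain (in both lines); if not, just delete /subdomain. You can replace index.php with index.html, index.asp or whatever suits you. The condition checks THE_REQUEST, the request line sent by the browser, because when a visitor asks for the root, Apache serves index.php internally; a rule that looked only at the rewritten path would redirect that request back to the root in an endless loop.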
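A minimal Python sketch of why the condition looks at the request line. It imitates the RewriteCond, widened to the four index file names mentioned in the note (handling all four in one pattern is our assumption; the rule shown handles index.php only), and keeps the /subdomain and yourwebsite.com placeholders:

```python
import re

# Imitates RewriteCond %{THE_REQUEST} ^[A-Z]{3,9}\ /subdomain/index\.php\ HTTP/,
# widened (as an assumption) to index.php, index.html, index.htm and index.asp
INDEX_REQUEST = re.compile(r"^[A-Z]{3,9}\ /subdomain/index\.(?:php|html?|asp)\ HTTP/")
ROOT_URL = "http://yourwebsite.com/subdomain/"

def index_redirect(request_line):
    """301 target when the browser itself asked for an index file, else None."""
    return ROOT_URL if INDEX_REQUEST.match(request_line) else None

print(index_redirect("GET /subdomain/index.php HTTP/1.1"))  # http://yourwebsite.com/subdomain/
# A request for the folder itself: Apache serves index.php internally, but the
# request line still says /subdomain/, so nothing is redirected and no loop occurs.
print(index_redirect("GET /subdomain/ HTTP/1.1"))  # None
```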

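* Have a custom 404 error page

In your .htaccess file add:

ErrorDocument 404 /your_website_dir/error404.html

Then create a custom webpage named error404.html that tells users what to do when they come across a non-existent page. Then check that a request for a non-existent page actually returns the 404 Not Found status code and not 200 OK. Keep the ErrorDocument target a local path as shown: if you give a full http:// URL instead, Apache answers with a redirect rather than the 404 status.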
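To check the status code, request a page that cannot exist and look at what comes back. A minimal Python sketch with urllib.request; the random file name is our own way of guaranteeing a missing page, and the opener parameter exists only so the function can be tried without a network connection. Note that urllib.request follows redirects, so an error page reached through a redirect shows up with its final status; a 200 there means the server is hiding the missing page:

```python
import uuid
import urllib.error
import urllib.request

def missing_page_status(base_url, opener=urllib.request.urlopen):
    """Request a random, surely non-existent page and return the HTTP status code."""
    probe = base_url.rstrip("/") + "/" + uuid.uuid4().hex + ".html"
    try:
        response = opener(probe, timeout=10)
    except urllib.error.HTTPError as err:
        return err.code  # 4xx/5xx responses arrive as HTTPError
    try:
        return response.status  # 200 here means a "soft 404"
    finally:
        response.close()

# Usage (needs network access; the address is a placeholder):
# status = missing_page_status("http://your_website.com/your_website_dir/")
# print("OK" if status == 404 else f"Expected 404, got {status}")
```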

Congratulations! By following these steps, your website should be re-indexed soon. In a few days it should be re-crawled and out of the sandbox, and the advice below may help you achieve better indexing for your website.

Cheers!