You can learn a bit more about the SEO topic from my online course.

Some examples and fixes of thin content follow:

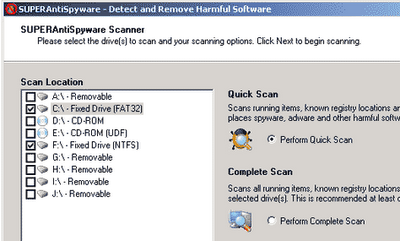

1. Target: Duplicate content caused by session, referral, or ordering/filtering parameters appended to the end of the URL, such as ?orderby=desc, which either do not change the actual content of the page or merely reorder it. The same happens if your website uses AJAX back-button navigation, a login system that appends session IDs to the URL, or frames with tracking IDs attached. Look at the different URLs in the picture, all representing the same content:

URL parameters, like session IDs or tracking IDs, cause duplicate content, because the same page is accessible through numerous URLs.

Solution (to session-appended URLs):

After a long search, the following technique from webmasterworld's member JDmorgan got ~90% of my website's content fully indexed. Here is how to implement it in practice using Apache .htaccess.

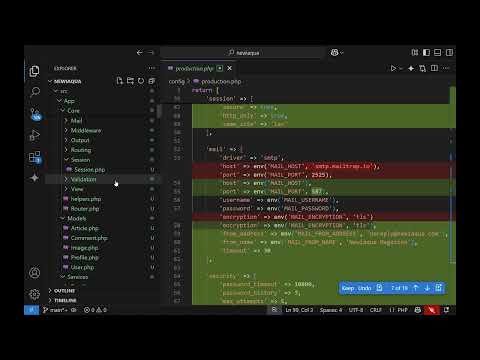

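Just put the following lines in your .htaccess file and test:

    # Enable the rewrite engine (skip this line if it is already on)
    RewriteEngine On

    # 1) Allow only .html pages to be spidered:
    #    any .html request that carries a query string is redirected
    #    to the same URL with the query string stripped (the trailing "?")
    RewriteCond %{QUERY_STRING} .
    RewriteRule ^([^.]+)\.html$ http://your_website.com/$1.html? [R=301,L]

    # 2) Remove the session ID from the URL parameters
    #    when a page is being called by bots
    #    (as written, this rule matches requests for the site root only)
    RewriteCond %{HTTP_USER_AGENT} Googlebot [OR]
    RewriteCond %{HTTP_USER_AGENT} Slurp [OR]
    RewriteCond %{HTTP_USER_AGENT} msnbot [OR]
    RewriteCond %{HTTP_USER_AGENT} Teoma
    # [^&]* accepts any session ID characters; &? also matches
    # when PHPSESSID is the last parameter in the query string
    RewriteCond %{QUERY_STRING} ^(([^&]+&)+)*PHPSESSID=[^&]*&?(.*)$
    RewriteRule ^$ http://your_website.com/?%1%3 [R=301,L]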
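Before touching .htaccess, it can help to see how many URL variants from a crawl or a server log collapse onto the same page. The following is a minimal Python sketch of that idea, not part of the original technique. The parameter names in `IGNORED_PARAMS` and the function names are assumptions, so adapt them to the parameters your own site appends.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical list of parameters that do not change the page content.
IGNORED_PARAMS = {"phpsessid", "sid", "orderby", "ref", "utm_source", "utm_medium"}


def canonicalize(url):
    """Return the URL with ignored parameters and the fragment removed."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    ]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


def group_duplicates(urls):
    """Map each canonical URL to the variants that collapse onto it (2 or more)."""
    groups = defaultdict(list)
    for url in urls:
        groups[canonicalize(url)].append(url)
    return {canon: variants for canon, variants in groups.items() if len(variants) > 1}
```

Every key returned by `group_duplicates` is a page that is reachable through several URLs and is therefore a candidate for the redirect rules or for a canonical tag.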

2. Target: Chains of 301 header redirects

A chain of 301 redirects can cost you PageRank and so lead to thin content. Check that your 301 redirects are final, i.e. that each one points to an end page and not to another redirecting page. You can use the LiveHTTPHeaders extension for this kind of check.

Solution: fix your redirects!
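If you have many URLs to check, the same test can be scripted. Below is a rough Python sketch that follows redirects one hop at a time and returns the whole chain. The function names and the `max_hops` limit are my own assumptions, and `fetch` is injectable so the logic can be tried without network access.

```python
import http.client
from urllib.parse import urljoin, urlsplit

REDIRECT_CODES = {301, 302, 303, 307, 308}


def fetch_head(url):
    """Send one HEAD request without following redirects; return (status, Location)."""
    parts = urlsplit(url)
    if parts.scheme == "https":
        conn = http.client.HTTPSConnection(parts.netloc, timeout=10)
    else:
        conn = http.client.HTTPConnection(parts.netloc, timeout=10)
    try:
        path = parts.path or "/"
        if parts.query:
            path += "?" + parts.query
        conn.request("HEAD", path)
        response = conn.getresponse()
        return response.status, response.getheader("Location")
    finally:
        conn.close()


def redirect_chain(url, fetch=fetch_head, max_hops=10):
    """Return the URLs visited, starting with `url` and ending at the last one reached."""
    chain = [url]
    for _ in range(max_hops):
        status, location = fetch(chain[-1])
        if status not in REDIRECT_CODES or not location:
            break
        next_url = urljoin(chain[-1], location)
        looped = next_url in chain
        chain.append(next_url)
        if looped:  # stop on a redirect loop
            break
    return chain
```

A result with more than two entries means the first redirect does not point at a final page, so the first URL should be redirected straight to the last one.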

3. Target: Content that is simply thin

Pages with fewer than 150 words of content or fewer than 10 visits during the whole year. You can check the latter in Google Analytics by looking at your content pages ordered by page views, with the time range set to the past year. Find and fix those URLs!

Solution: Either remove the page, nofollow the links to it, block it with robots.txt, or rewrite/merge the content.
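The word-count half of this check is easy to automate. Here is a small Python sketch that counts the visible words in a page's HTML and flags pages under the 150-word threshold mentioned above. It counts every visible word, including menus and footers, so treat the result as a rough upper bound. The names `count_words` and `is_thin` are just illustrative.

```python
from html.parser import HTMLParser

SKIPPED_TAGS = {"script", "style"}  # their text is never shown to the reader
MIN_WORDS = 150  # threshold taken from the text above


class _TextExtractor(HTMLParser):
    def __init__(self):
        super().__init__()
        self.words = 0
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in SKIPPED_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in SKIPPED_TAGS and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.words += len(data.split())


def count_words(html):
    """Count whitespace-separated words outside <script> and <style>."""
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return parser.words


def is_thin(html, min_words=MIN_WORDS):
    """True if the page has fewer than `min_words` visible words."""
    return count_words(html) < min_words
```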

4. Target: Heavy internal linking

By placing many links on a page to other pages/tags/categories, you reduce that particular page's power. As a result, only the few pages supported by lots of incoming internal links are considered not thin by the Google Panda algorithm.

Solution: Clean up the unnecessary links on that page by adding rel="nofollow" to the outgoing links or, better, remove (or move to the bottom) the whole section (tag cloud, partner links, etc.) from your website.
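To get a feeling for how link-heavy a page is, you can count its links by type. The sketch below is only an illustration in Python: `link_summary` is a hypothetical helper, and what counts as "too many" followed links is left to your own judgment.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit


class _LinkCollector(HTMLParser):
    def __init__(self):
        super().__init__()
        self.links = []  # list of (href, is_nofollow)

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href")
        if href:
            rel = (attributes.get("rel") or "").lower().split()
            self.links.append((href, "nofollow" in rel))


def link_summary(html, page_url):
    """Count followed internal links, followed external links, and nofollow links."""
    collector = _LinkCollector()
    collector.feed(html)
    collector.close()
    host = urlsplit(page_url).netloc
    summary = {"internal_followed": 0, "external_followed": 0, "nofollow": 0}
    for href, nofollow in collector.links:
        if nofollow:
            summary["nofollow"] += 1
        elif urlsplit(urljoin(page_url, href)).netloc == host:
            summary["internal_followed"] += 1
        else:
            summary["external_followed"] += 1
    return summary
```

Running it before and after you trim a tag cloud or a partner-links block shows how many followed links the page still hands out.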

5. Target: Percentage of URLs with thin content

Google maintains two indexes: primary and supplemental. Everything that looks thin or not worthy (i.e. doesn't have enough backlinks) goes to the supplemental one. One factor in determining thin content is the ratio of a website's pages that are indexed and available via search to its pages in the supplemental index. So the more pages you maintain in Google's primary index, the better. It is possible that your new (already fixed) and old (thin) content now fight for position in Google's search results. Remember that the old content already has Google's trust thanks to its earlier creation date and the links pointing to it, but it is still thin!

Solution: Either redirect the old URL to the new one via a 301 permanent redirect, or log in to Google's Webmaster Tools, go to Tools->Remove URL, enter your old URLs, and wait. Before this, you'll have to manually add meta noindex, nofollow to those pages and remove all restrictions on them in your robots.txt file so that Google can crawl them and apply the noindex, nofollow attributes.
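Both preconditions for the removal route (the page carries noindex, and robots.txt does not block the crawler from seeing it) can be checked with a few lines of Python. This is a sketch using the standard library's `urllib.robotparser`; the function names and the default `Googlebot` user agent string are my assumptions.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class _RobotsMetaParser(HTMLParser):
    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "robots":
            content = attributes.get("content") or ""
            for part in content.split(","):
                if part.strip():
                    self.directives.add(part.strip().lower())


def meta_robots(html):
    """Return the set of directives found in <meta name="robots"> tags."""
    parser = _RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return parser.directives


def ready_for_removal(url, html, robots_txt, user_agent="Googlebot"):
    """True if the page carries noindex and robots.txt lets the bot crawl it."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    return "noindex" in meta_robots(html) and rules.can_fetch(user_agent, url)
```

If this returns False for an old URL, either the meta tag is missing or robots.txt still hides the page, and the crawler will never see the noindex.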

Q: How to find thin content URLs more effectively?

Sometimes when you try to find indexed thin content via site:http://yourwebsite.com, you won't see the full list of URLs.

Solution:

- Use the "-" operator in your query:

First, do a site: search and then successively remove the known, valid URLs from the results.

"site:http://yourwebsite.com -article"

will remove all URLs like article-5.html, article-100.html, etc. This way you'll see the thin content pages more quickly.

- When you know the thin content page name, just search for:

site:http://yourwebsite.com problematic_parameter

(e.g. "site:http://yourwebsite.com mode" will show all of the indexed modes of your website, like mode=new_article, mode=read_later, mode=show_comment, etc. Find the wrong ones and submit a removal request for them.)

Enjoy, and feel free to share your experience!

---

P.S. If you don't have access to the .htaccess file, you can achieve the above functionality using the canonical tag - just take a look at this SEO penalty checklist series.

More information on the effect of dynamic URLs on search engines, as well as how to manage them using Yahoo's Site Explorer, can be found here: https://web.archive.org/web/20091004104302/http://help.yahoo.com/l/us/yahoo/search/siteexplorer/dynamic/dynamic-01.html